The Evolution of the Open Web

Developers have been pitched blockchain technology for many years on the basis of hand-wavy “use cases” tied to unclear definitions of how the technology works, what it’s actually good for, and how the platforms that employ it meaningfully differ from each other. This has justifiably created both confusion and skepticism.

In this post, I want to break through that fog and provide a set of clear mental models for understanding how potential use cases drive the technical tradeoffs that each platform has been forced to make. These mental models derive from the progress that the technology has made over the past 10 years through three distinct generations from Open Money to Open Finance to Open Web.

My goal is that you leave with a much clearer and more concrete understanding of what blockchain technology is, what different platforms are good for and how the future might unfold.

A Quick Blockchain Primer

To cover the obligatory basics, a blockchain is essentially just a database that is run by a group of different operators rather than a single entity (like Amazon or Microsoft or Google). This gives it powerful properties because, unlike today’s clouds, you no longer have to trust the “owner” of the database (or their operational security) to maintain high value data. When the blockchain is public (as the largest ones are), anyone can use it for anything.

To enable this system to run across a wide range of potentially anonymous machines around the world, the system must have a digital token which facilitates the payment from users of the chain to the operators of the system (and provides security guarantees baked into the game theory behind it). Though it was co-opted by the misguided ICO boom in 2017, the core idea of “tokens” and of “tokenization” in general — that a single digital asset can be uniquely identified and transferred — is incredibly powerful.

It’s also important to separate the database portion which stores the data from the layer that actually modifies the data (the virtual machine). These are usually tied together to a degree because the technical properties of the database layer dictate what’s possible to do with the modification logic but each of them separately drives the higher level properties of the system.

The chain can be optimized along several dimensions, for example security (think Bitcoin), speed, cost or scalability. The modification logic on top of this can also be optimized along several dimensions — it could be a simple “add/subtract” calculator (like Bitcoin) or a fully Turing complete virtual machine (like Ethereum or NEAR).

So it is actually possible that two blockchain-powered platforms tune the underlying blockchain and VM to drive completely different functionalities and they might never compete with each other in the market. For example, Bitcoin is in a different world from Ethereum or NEAR which are totally separate from Ripple or Stellar, despite all being run using “blockchain technology.”

The 3 Generations of Blockchain

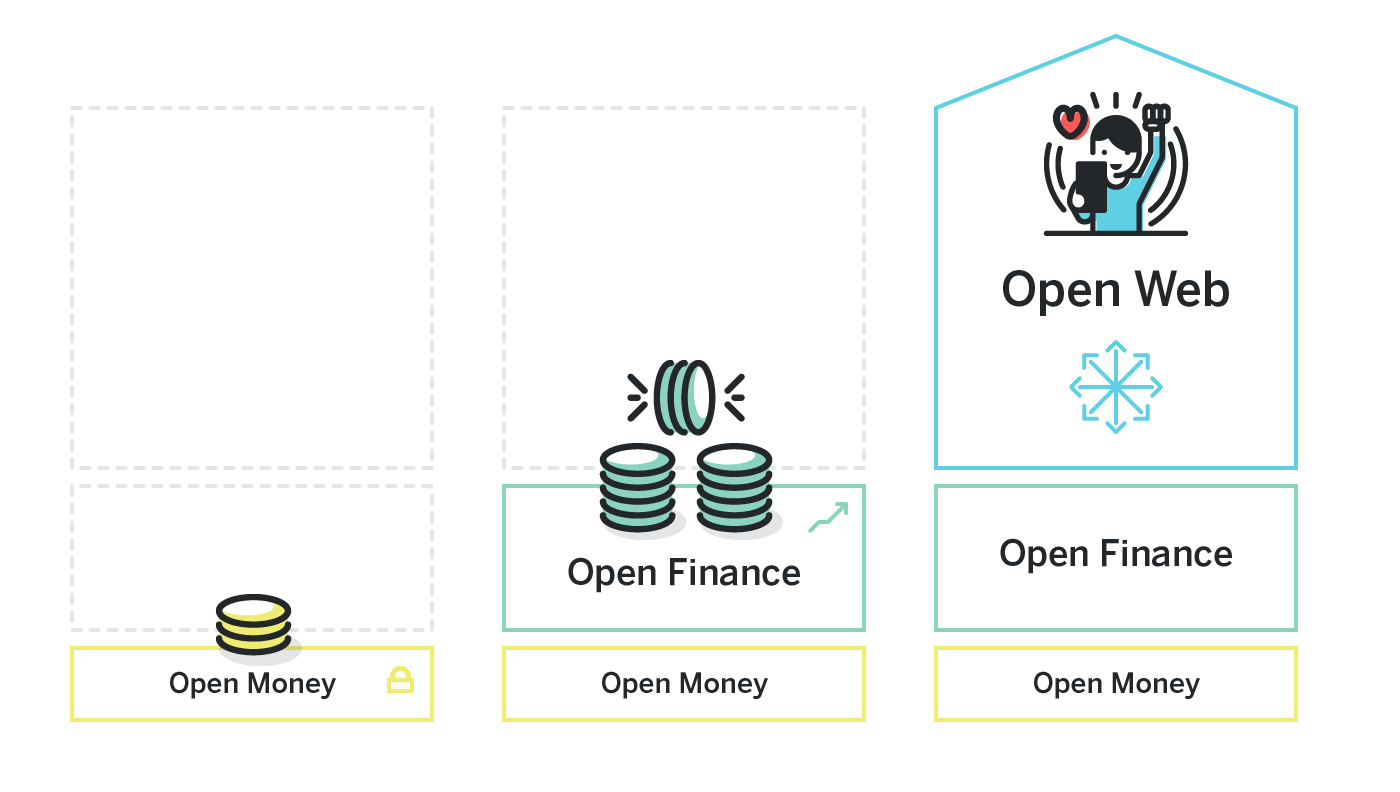

Both technological advances and specific system design decisions have driven blockchain’s expanding functionality during the 3 generations of its development, which occurred over the last 10 years. These generations can be grouped in the following way:

- Open Money: Give anyone access to digital money.

- Open Finance: Apply programmability to multiply the capabilities of that Open Money.

- Open Web: Expand the scope of Open Finance to encompass all high value data and bridge it to consumer-scale.

Let’s start by examining Open Money.

First Generation: Open Money

Money is the basic building block of capitalism. Step one allowed anyone anywhere to have access to money.

One of the highest value pieces of data you can store in a database is money itself. That’s the innovation of Bitcoin: a simple shared ledger that allows everyone to agree that Joe owns 30 BTC and just sent Jill 1.5 BTC. Bitcoin was tuned to optimize for security above all else. Its consensus is enormously expensive, time-consuming and bottlenecked, while its modification layer is essentially just a “plus/minus calculator” which allows the transfer of balances and a few other tightly scoped operations.

Bitcoin is a good training tool because it illustrates the fundamental benefits of data stored on a blockchain: it is independent of any single third party and can be operated on permissionlessly (one holder of BTC can initiate a p2p transfer to another account without asking anyone).
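To make the “plus/minus calculator” idea concrete, here is a minimal sketch of a shared ledger that supports only balance transfers. It is an illustration rather than Bitcoin's actual design: real Bitcoin tracks unspent transaction outputs and verifies signatures, both of which are omitted here, and the `Ledger` class and account names are hypothetical.

```python
SATOSHI_PER_BTC = 100_000_000  # balances are kept as integers to avoid rounding


class Ledger:
    """Toy ledger whose only operation is moving a balance between accounts."""

    def __init__(self, balances):
        self.balances = dict(balances)

    def transfer(self, sender, recipient, amount):
        # The sender needs no third party's permission, only sufficient funds.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


# Joe owns 30 BTC and sends Jill 1.5 BTC.
ledger = Ledger({"joe": 30 * SATOSHI_PER_BTC})
ledger.transfer("joe", "jill", 150_000_000)
```

After the transfer, Joe holds 28.5 BTC and Jill holds 1.5 BTC, and the total in the ledger is unchanged.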

Because of the simplicity and power of Bitcoin’s promise, “money” has been one of the earliest and most successful use cases for blockchain. But people want to use money in many different ways. Bitcoin’s “extra slow, extra expensive, extra secure” tuning works well for storing value as if it were gold but doesn’t work well for uses like rapid e-commerce payments or cross-border transfers.

Tuning Open Money

For these other use cases, other chains have emerged and tuned their parameters accordingly:

- Transfers: if you want to allow a million people to send occasional amounts of money around the world every day, you need something that’s much more performant and less expensive than Bitcoin but still maintains a healthy amount of security. Ripple and Stellar are two projects that have tuned their chains for this use case.

- Rapid transactions: if you want to allow a billion people to spend digital money like they do with credit cards, you need to further tune the chain to be massively scalable, massively performant and low cost. To do this, you generally have to make some sacrifices along the dimension of security. There are two approaches to this. First, build a faster “layer 2” on top of Bitcoin which optimizes for fast performance but then stores the assets back in the Bitcoin “vault” when transactions are complete, like Lightning Network. Second, build a new blockchain which provides as much security as possible while still allowing for fast, cheap transactions, like Libra.

- Private transactions: if you want to ensure that no one can infer who sent what to whom, you need to add a layer that anonymizes transactions, which sacrifices performance and adds to cost. Zcash and Monero have done this.
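The “layer 2” approach in the list above can be sketched as a payment channel: funds are locked on the base chain once, many fast payments happen off-chain, and only the final balances are written back. This is a simplified illustration, not the Lightning Network protocol itself, which relies on signed commitment transactions and other machinery omitted here. The `PaymentChannel` class and its fields are hypothetical.

```python
class PaymentChannel:
    """Toy channel: one on-chain open, many off-chain updates, one on-chain close."""

    def __init__(self, deposits):
        self.balances = dict(deposits)
        self.on_chain_tx = 1      # opening the channel locks funds on the base chain
        self.off_chain_updates = 0
        self.closed = False

    def pay(self, sender, recipient, amount):
        # Off-chain update: fast and cheap because the base chain is not touched.
        if self.closed:
            raise RuntimeError("channel is closed")
        if amount <= 0 or self.balances[sender] < amount:
            raise ValueError("invalid payment")
        self.balances[sender] -= amount
        self.balances[recipient] += amount
        self.off_chain_updates += 1

    def settle(self):
        # Closing writes only the final balances back to the base chain.
        self.closed = True
        self.on_chain_tx += 1
        return dict(self.balances)


channel = PaymentChannel({"alice": 1000, "bob": 1000})
for _ in range(100):
    channel.pay("alice", "bob", 5)
final_balances = channel.settle()
```

In this run, one hundred payments cost only two base-chain transactions, which is the tradeoff the layer 2 design is aiming for.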

Because the token that represents this money is actually a fully digital asset, it can also be programmed. This can occur at the root level — for example, the total amount of Bitcoin that will ever be produced is coded into the core Bitcoin system — or it can be taken to a whole other level by having the right computing system on top of it.[1]
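As a worked example of programming at the root level, the sketch below reproduces the arithmetic behind Bitcoin's supply cap. The constants are not from this post. They reflect Bitcoin's widely documented issuance schedule: a 50 BTC block reward that halves every 210,000 blocks, computed in whole satoshis. The function names are my own.

```python
SATOSHI_PER_BTC = 100_000_000
HALVING_INTERVAL = 210_000                 # blocks between reward halvings
INITIAL_SUBSIDY = 50 * SATOSHI_PER_BTC     # reward for the earliest blocks


def block_subsidy(height):
    """Reward in satoshis for the block at the given height."""
    halvings = height // HALVING_INTERVAL
    # Integer right shift halves the reward and rounds down to whole satoshis.
    return INITIAL_SUBSIDY >> halvings


def total_supply():
    """Sum every block reward until the subsidy rounds down to zero."""
    total = 0
    halvings = 0
    while (INITIAL_SUBSIDY >> halvings) > 0:
        total += HALVING_INTERVAL * (INITIAL_SUBSIDY >> halvings)
        halvings += 1
    return total


supply = total_supply()  # 2_099_999_997_690_000 satoshis
```

Because each reward is rounded down to a whole satoshi, the total lands slightly under 21 million BTC rather than exactly on it.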

This is where Open Finance comes in.

Second Generation: Open Finance

With Finance, Money is no longer just a store of value or transactional asset — it can be operated on in useful ways which multiply its potential.

Things get far more interesting for digital money when you realize that the same properties which allow people to permissionlessly initiate transfers of Bitcoin also allow developers to write programs that do the same thing. In this context, think of this digital money as having its own independent API which doesn’t require any company to provide you with an API key and Terms of Use contract.

This is the promise of “Open Finance”, also known as “Decentralized Finance (DeFi)”. And it’s where we again hit the issues of technological progress and platform tuning.

Ethereum

As mentioned above, Bitcoin’s API is quite simple and non-performant. It is possible to deploy scripts to the Bitcoin network which can move Bitcoin around but, to do anything more interesting, you generally need to move the Bitcoin itself to another blockchain platform (which is a nontrivial operation).

Other platforms have worked to marry the security necessary to handle digital money with a more complex modification layer. Ethereum was the first to offer this. Instead of the “add/subtract” calculator of Bitcoin, Ethereum created a full virtual machine on top of the storage layer which allowed developers to write full programs and run them on the chain natively.

This is important because the security of the digital asset (eg money) that is stored on the chain is only as good as the security and robustness of the programs which can natively modify its state. Ethereum’s “smart contract” programs are essentially serverless scripts that run on the chain the same way that a vanilla “send Jill 23 tokens” transaction runs on Bitcoin. Ethereum’s native token is “Ether” or “ETH”.
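The difference between a plain transfer and a program that runs natively on the chain can be sketched as follows. This is a toy model and not the Ethereum Virtual Machine: contracts here are ordinary Python callables, and there is no gas, no signatures and no rollback on failure. The `Chain` class and the `make_splitter` contract are hypothetical names.

```python
class Chain:
    """Toy chain: a balance table plus programs that can modify it."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.contracts = {}

    def transfer(self, sender, recipient, amount):
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid transfer")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount

    def deploy(self, address, program):
        # A deployed program lives at its own address, like any other account.
        self.contracts[address] = program

    def call(self, sender, address, amount):
        # Funds move to the contract, then its code runs against chain state.
        self.transfer(sender, address, amount)
        self.contracts[address](self, address, amount)


def make_splitter(first, second):
    """Contract that forwards whatever it receives to two recipients."""
    def program(chain, own_address, amount):
        half = amount // 2
        chain.transfer(own_address, first, half)
        chain.transfer(own_address, second, amount - half)
    return program


chain = Chain({"joe": 100})
chain.deploy("splitter", make_splitter("jill", "sam"))
chain.call("joe", "splitter", 23)
```

The call moves 23 tokens out of Joe's account and the deployed program, not Joe, decides where they end up.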

Blockchain Components as Plumbing

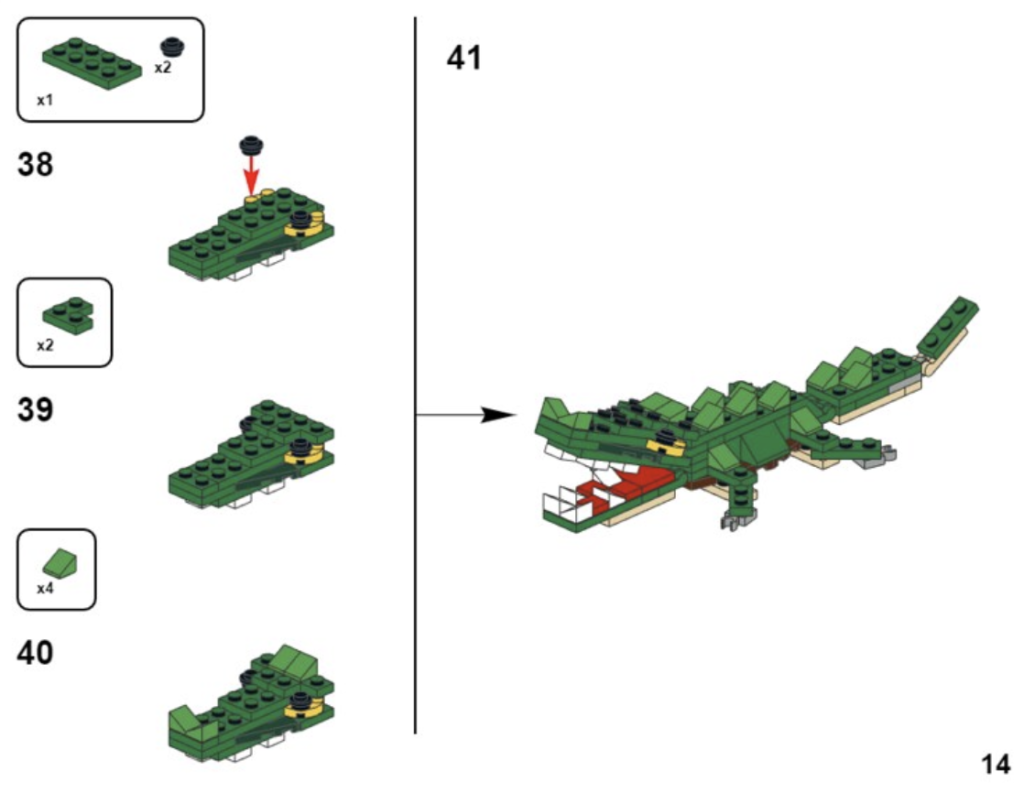

The power of this took some time to emerge. Because the API on top of ETH is permissionless (like Bitcoin) but infinitely programmable, it became possible to create a series of building block components which essentially pipe ETH from one to another to accomplish useful work for the end user.

In the traditional financial world, this would require, for example, a big bank to negotiate contracts and API access with each of the individual providers. On blockchain, each of these components was independently created by developers and quickly scaled to handling millions of dollars of throughput and holding over $1B of value in early 2020.

For example, let’s start with Dharma, a wallet that allows users to store digital tokens and earn interest on those balances. This is a fundamental use case of traditional banking. The developers of Dharma generate that interest for their users by pulling together multiple components that were created on top of Ethereum. For example, users’ dollars are converted to DAI, an Ethereum-based “stable coin” which maintains the value of the US dollar, then piped into Compound, a protocol which lends these balances, thus earning users instant interest on their balances.
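The piping described above can be sketched with three independent components that call one another. None of this is the real Dharma, DAI or Compound code or API. `StableToken`, `LendingPool` and `SavingsWallet` are hypothetical stand-ins, the interest rate is invented, and borrowers (the source of the interest) are not modeled.

```python
class StableToken:
    """Hypothetical dollar-pegged token; balances are held in cents."""

    def __init__(self):
        self.balances = {}

    def mint(self, owner, cents):
        self.balances[owner] = self.balances.get(owner, 0) + cents

    def transfer(self, sender, recipient, cents):
        if cents <= 0 or self.balances.get(sender, 0) < cents:
            raise ValueError("invalid transfer")
        self.balances[sender] -= cents
        self.balances[recipient] = self.balances.get(recipient, 0) + cents


class LendingPool:
    """Hypothetical pool that credits interest to depositors each period."""

    def __init__(self, token, address, rate_bps):
        self.token = token
        self.address = address
        self.rate_bps = rate_bps  # interest per period, in basis points
        self.deposits = {}

    def deposit(self, owner, cents):
        self.token.transfer(owner, self.address, cents)
        self.deposits[owner] = self.deposits.get(owner, 0) + cents

    def accrue(self):
        for owner, cents in list(self.deposits.items()):
            self.deposits[owner] = cents + cents * self.rate_bps // 10_000


class SavingsWallet:
    """Consumer-facing product built purely by calling the two components."""

    def __init__(self, token, pool):
        self.token = token
        self.pool = pool

    def save(self, user, dollars):
        cents = dollars * 100
        self.token.mint(user, cents)    # stands in for converting dollars
        self.pool.deposit(user, cents)  # piped straight into the lending pool

    def balance(self, user):
        return self.pool.deposits.get(user, 0)


token = StableToken()
pool = LendingPool(token, "pool", rate_bps=50)
wallet = SavingsWallet(token, pool)
wallet.save("ana", 100)
pool.accrue()
```

The wallet contains no lending or token logic of its own. It only wires together components that someone else deployed.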

Implications of Open Finance

The key takeaway is that a real consumer-facing product was created by relying on a stack of components which were each created by a different team and which were used without asking for any permission or API keys and now handle millions of dollars of value. It is almost like open source software but, whereas open source software relies on downloading a copy of a particular library again for each implementation, open components are only deployed once and then anyone can just call that specific instance to access its shared state.

Each of the teams who created those components can rest easy knowing that they won’t become liable for any oversized EC2 bills because someone is abusing their API — the metering and charging for use of these components is done intrinsically as part of the chain.
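Here is a minimal sketch of how metering can be built into execution so that the caller, not the component's author, pays for usage. The operation costs and names are invented for illustration and are not Ethereum's actual gas schedule. Real chains also revert state changes when a call runs out of gas, which this sketch does not model.

```python
class OutOfGas(Exception):
    pass


class GasMeter:
    """Tracks how much work a call has consumed against its prepaid limit."""

    def __init__(self, limit):
        self.limit = limit
        self.used = 0

    def charge(self, units):
        if self.used + units > self.limit:
            raise OutOfGas("gas limit exceeded")
        self.used += units


# Hypothetical per-operation costs: storage is priced far above arithmetic.
OP_COSTS = {"add": 3, "store": 100}


def run(ops, gas_limit, gas_price):
    """Execute ops until done or out of gas; return (ops completed, fee owed)."""
    meter = GasMeter(gas_limit)
    completed = 0
    try:
        for op in ops:
            meter.charge(OP_COSTS[op])
            completed += 1
    except OutOfGas:
        pass  # execution simply stops; the caller still pays for work done
    return completed, meter.used * gas_price


result = run(["add", "store", "add"], gas_limit=1000, gas_price=2)
```

Because every operation is charged to the caller as it executes, a flood of requests costs the requester rather than the team that deployed the component.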

Performance and Tuning

Ethereum operates with parameters similar to Bitcoin’s, just slightly faster (blocks propagate about 30x faster) and slightly cheaper (instead of roughly $0.50 transaction cost on Bitcoin, it costs about $0.10[2]). This has allowed it to maintain comfortable security while allowing slow speed apps like asset management to flourish.

But, as a first-generation bare-metal technology, the Ethereum network has been brought to its knees in times of stress and suffers from a maximum 15-transaction-per-second bottleneck. This performance gap has left Open Finance stalled as a niche proof-of-concept. We are stuck with slow-moving assets similar to how the global financial system operated in the analog age of paper checks and telephone confirmations because Ethereum has less computing power than a 1990 graphing calculator.
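To put the 15-transaction-per-second ceiling in perspective, the short calculation below compares it with consumer-scale demand. The demand figure is an assumption chosen for illustration (one transaction per day from each of a billion users), not a measurement.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_capacity(tps):
    """Transactions a chain can process in one day at a sustained rate."""
    return tps * SECONDS_PER_DAY


def required_tps(users, tx_per_user_per_day):
    """Average throughput needed to serve the given demand."""
    return users * tx_per_user_per_day / SECONDS_PER_DAY


capacity = daily_capacity(15)            # 1_296_000 transactions per day
demand = required_tps(1_000_000_000, 1)  # roughly 11,574 transactions per second
```

Under that assumption the gap is nearly three orders of magnitude, before accounting for peaks in demand.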

Ethereum showed a hint of the power of composable components for financial use cases but also opened the door to a much broader set of use cases, called the Open Web.

Third Generation: Open Web

Now anything which has value can acquire the properties of money, merging the Internet with Open Finance to create the Internet of Value and the Open Web.

We saw previously that “Open Money” encompasses a wide spectrum of different use cases and how a next generation technology, Ethereum, made Open Money far more useful by creating the permissionless composability of “Open Finance.” Now, we’ll see how one more generation of technology expands the possibilities of Open Finance even further to unlock the true potential of blockchain.

Fundamentally, all the “money” we’ve been talking about is just a line item of data stored on the blockchain with its own open API. But the database can store anything.

While the nature of blockchain makes it best suited for elements that have meaningful value, the definition of “meaningful value” is highly flexible. Any data which is potentially valuable to other people can be set free by “tokenizing” it. “Tokenization” in this context is the process by which an existing asset (not a newly generated one like Bitcoin) is transferred onto the blockchain and provided with the same sort of permissionless API that Bitcoin and Ethereum have. As with Bitcoin, this allows for the option of globally enforceable scarcity (whether 21 million or just one).

Consider community projects like Reddit where users earn online reputation in the form of “Karma”. Then consider projects like SoFi where a wide range of signals are used to evaluate whether someone should be approved for a loan. In today’s world, if a hackathon team building the next SoFi wanted to incorporate the Karma score into their credit algorithm, they’d need to negotiate a bilateral agreement with the Reddit team to acquire certified API access. If Karma is tokenized, they have all the tools they need to integrate with Karma and Reddit never needs to know about it. Reddit simply benefits from more users who want to grow their Karma because it’s useful around the world.

Even further, 100 different teams at the next hackathon can figure out new ways to take this asset plus dozens more and create a new set of publicly reusable components or build new consumer-facing applications from them.
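The Karma example can be sketched as a token with a public read method that any other team can call without an agreement. Everything here is hypothetical: the `KarmaToken` class, the scoring formula and the threshold are invented for illustration and do not describe how Reddit or SoFi work.

```python
class KarmaToken:
    """Hypothetical tokenized reputation with an openly readable balance."""

    def __init__(self):
        self._balances = {}

    def award(self, account, points):
        if points <= 0:
            raise ValueError("points must be positive")
        self._balances[account] = self._balances.get(account, 0) + points

    def balance_of(self, account):
        # Anyone can read this; no API key or bilateral contract is involved.
        return self._balances.get(account, 0)


def loan_approved(karma, account, annual_income, threshold=1000):
    """Third-party credit check that mixes its own signal with Karma."""
    score = annual_income // 100 + karma.balance_of(account)
    return score >= threshold


karma = KarmaToken()
karma.award("ana", 600)

ana_ok = loan_approved(karma, "ana", annual_income=50_000)  # 500 + 600
bo_ok = loan_approved(karma, "bo", annual_income=50_000)    # 500 + 0
```

The lending team wrote `loan_approved` without touching `KarmaToken` or coordinating with whoever deployed it.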

This is the vision of the Open Web.

Just like Ethereum enabled the easy piping of high value money through permissionless components, any asset which has been tokenized can be piped through a similar set of components and “spent”, exchanged, collateralized, modified or otherwise interacted with as per the specification of its open API.

Tuning for the Open Web

The Open Web isn’t fundamentally different from Open Finance — the first is really a superset of the second — but the expansion of Open Web’s use cases requires a substantial uptick in performance and also the capability to reach a new tranche of potential users.

The key requirements for a platform to drive the Open Web are:

- Higher volume, higher speed, lower cost transactions — because the chain is no longer just handling slow-moving asset management decisions, it needs to scale to support more nuanced data types and use cases.

- Usability — because the use cases will cross into consumer-facing applications, it’s critical that the components developers build or the apps on top of them allow for smooth end-user experiences, for example when they’re provisioning or linking accounts to various assets or platforms while still preserving user ownership of their data.

These specifications have not been met by any platform before because they are enormously complicated. It has taken years of research to arrive at the point where new consensus mechanisms merge with new runtimes and new scalability techniques while still maintaining the performance and security needed to maintain a backbone of monetary assets.

The Open Web Platform

There are dozens of blockchain projects coming to market this year which have tuned their platforms to address various subsets of the Open Money and Open Finance use cases. Given the limitations of the existing technology, it made sense for them to optimize for a specific niche.

NEAR is the only chain which has deliberately advanced its technology and tuned its performance characteristics to meet the full needs of the Open Web.

NEAR merges scalability approaches from the high performance database world with advances in runtime performance and years of progress on usability. Like Ethereum, it has a full virtual machine built on top of a blockchain but the underlying chain adjusts its capacity to meet demand by dynamically splitting computation into parallel processes (sharding) while still maintaining the security needed to keep the data safe.
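The general idea of splitting work into parallel shards and adjusting the shard count to demand can be sketched as follows. This is a generic illustration under my own assumptions, not NEAR's actual sharding protocol: accounts are assigned by hash, and the number of shards is derived from a hypothetical per-shard capacity.

```python
import hashlib


def shard_for(account, num_shards):
    """Deterministically map an account name to a shard index."""
    digest = hashlib.sha256(account.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def shards_needed(pending_tx, capacity_per_shard):
    """Smallest shard count that covers the pending load (at least one)."""
    return max(1, -(-pending_tx // capacity_per_shard))  # ceiling division


def assign(accounts, num_shards):
    """Group accounts by shard so each group can be processed in parallel."""
    shards = [[] for _ in range(num_shards)]
    for account in accounts:
        shards[shard_for(account, num_shards)].append(account)
    return shards


accounts = [f"user{i}" for i in range(1000)]
num_shards = shards_needed(pending_tx=250, capacity_per_shard=100)
groups = assign(accounts, num_shards)
```

When load rises, `shards_needed` returns a larger count and the same accounts are spread across more parallel groups. A production system must also handle transactions that span shards, which this sketch ignores.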

This means that the full set of possible use cases can be built on NEAR — fiat-backed coins that give global access to stable currency, Open Finance tools that scale up to complex financial instruments and out to everyday people, and Open Web applications that integrate all of this to power everyday commerce and interaction.

Outro

The story of the Open Web is just beginning because we’ve only just developed the requisite technologies to bring it to its proper scale. With that milestone achieved, the future will be driven by the innovation that can be achieved on top of this new stack and by how well the developers and entrepreneurs at its leading edge are supported.

For a sense of the potential impact of the Open Web, note the Cambrian explosion that occurred when the early Internet, after several challenging years, developed the necessary protocols to finally allow consumer spending of money online in the late 1990s. The ensuing 25 years have seen web-based commerce grow to generate over $2 trillion in spending each year.

Similarly, the Open Web expands the scope and reach of the financial primitives of Open Finance and enables their inclusion in business- and consumer-facing applications in ways that we can guess but certainly not predict.

[1]: Bitcoin Script does allow some programmability but this is quite limited.

[2]: Both are based on an auction, so all numbers are approximate.
